It’s that time of year again when we put on our sparkle, don our Christmas cracker paper hats, and head out of the office to get to know each other better.

There’ll be heartfelt thanks and lots to learn as we find out what everyone chose for a secret Santa gift. And that’ll be important, not just for a jolly Christmas but also for how well 2024 turns out.

Cheesy as it is, to get the best outcomes, we all need to be singing from the same hymn sheet.

Efforts to improve the effectiveness of marketing succeed when they put people at the heart of the programme.

And, although it happens more quietly, the opposite is true too. When effectiveness programmes fail, it’s nearly always because the relevant people weren’t properly included.

Effectiveness programmes fail if they aren’t people-centric

Do your effectiveness analysis findings get implemented? If not, why not? What needs to improve? That’s a hard set of questions to ask of a CMO or marketing director, but we at magic numbers asked them anyway when we interviewed 11 marketing directors and CMOs.

What we discovered is that senior marketers, unbeknownst to each other, are often in the same boat. They undertake analysis and get recommendations, but then struggle to implement them and reap the rewards.

And the reason why is all to do with people and change.

Sometimes the blocker is a person whose job is diminished by the needed change. At other times it’s a suspicious numbers person, often in finance. Or there’s a co-ordination failure, where the needed change involves two different departments that don’t typically work together.

The quote below, from an anonymous CMO in the research, typifies what happens:

“If this model is telling them things are great, then you know, they’re a hero, everything’s great, they don’t need to change anything. But, if it is the other way around, suddenly there’s a raft of reasons why they won’t change things. They say they don’t understand what the model is.”

People who don’t agree with the recommended change question the analytics. They say they don’t understand it and suggest that maybe it’s wrong. Then they pull out some other research that says the opposite.

By then, the effectiveness research provider, having delivered their PowerPoint deck, is long gone, and there’s no-one at hand to resolve the seeming contradiction between different sources of research.

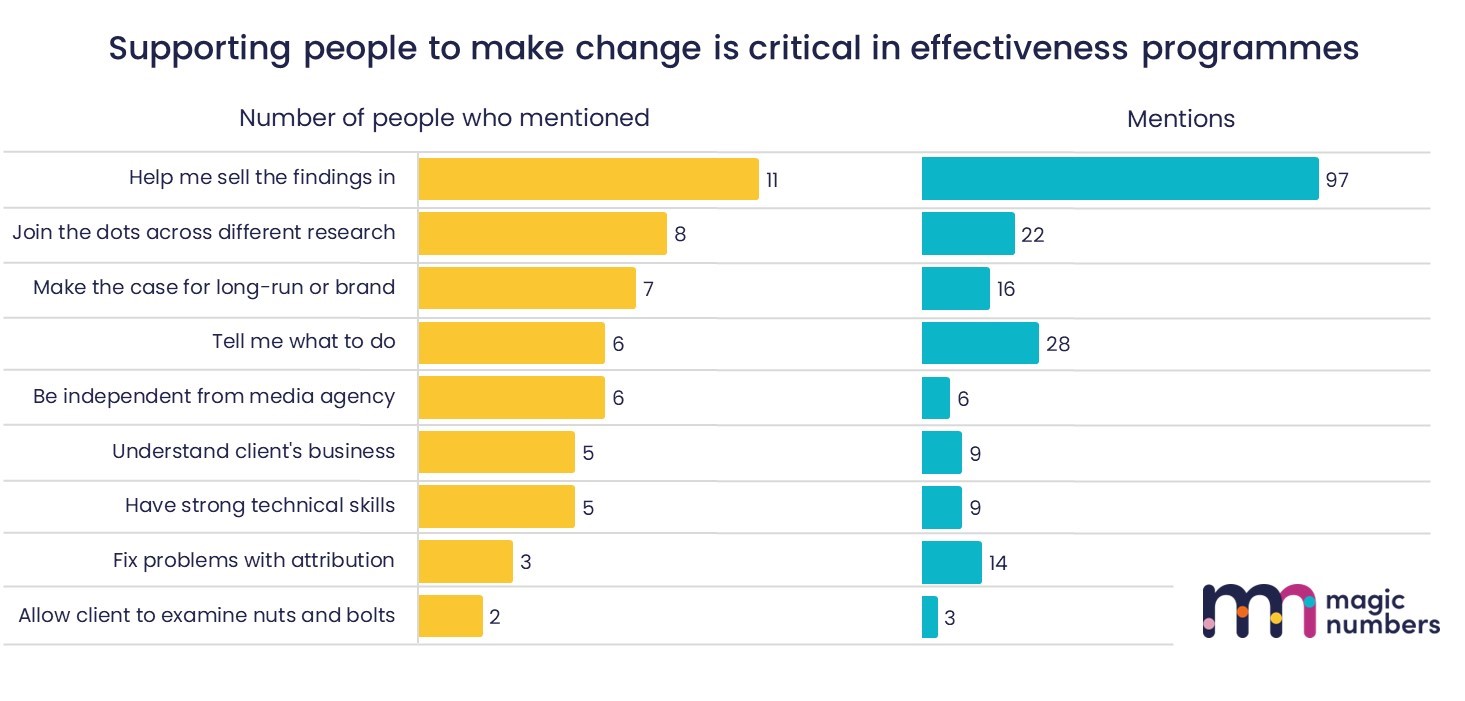

We asked the senior marketers what analytics providers could do more of, or do better. By far the most common answer was to support people in making change happen. They said “help me sell it in” over and over again, in 100 different ways.